This Will Be On The Test

I like games that work. The occasional glitch is understandable, but all the funny ones that people make YouTube videos about are opt-in and not game-breaking.

This is why I'm fairly deliberate in writing software; the way I see it, a buggy release (even in WIP builds) hinders my goal of getting feedback on the most important parts of the game. Bugs are cheaper and faster to prevent (or at least catch early) than they are to put off. If I'm going to a playtesting session expecting to hear about the same bugs over and over, I should probably stay home that night and fix them. Of course, preventing them in the first place is also great, and today I'll talk about how I do that.

Automated Testing

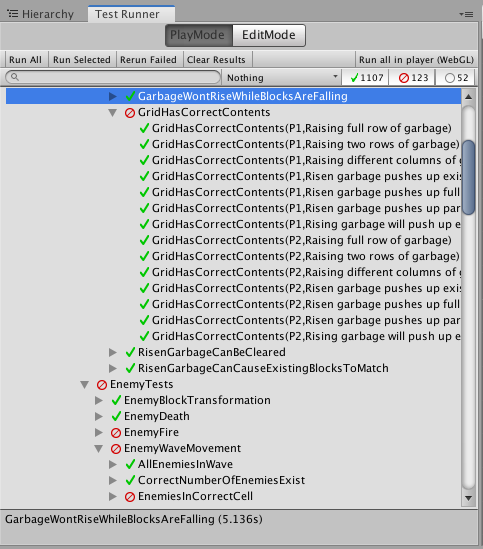

When I say "automated testing," I'm referring to a big checklist of things that I expect my code to do. Like this:

Well, actually, not "like this." Exactly this. Out of 1282 ways I anticipate the game could break, my code stands up to 86% of them! Room for improvement, but not bad.

1282 seems like a lot, but most of those tests are parameterized; that is, I run the same chunk of test code with different inputs. Here's an example:

These tests help me ensure that the player dies the way I expect them to. All of the tests shown in the GIF are running on the same code, but on different data (specifically the contents of the grid).
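If you haven't seen a parameterized test before, here is a minimal Python sketch of the idea: one test body, many grids. Everything in it is hypothetical and for illustration only. That includes the string-based grid format, the toy `player_dies` rule (the player dies once any block reaches the bottom row) and the case names. None of it is Chromavaders' actual code or rules.

```python
import unittest


# Hypothetical toy rule, NOT Chromavaders' actual logic: the grid is a list of
# strings, "#" marks a block, and the player (standing on the bottom row) dies
# as soon as any block reaches that row.
def player_dies(grid: list[str]) -> bool:
    return "#" in grid[-1]


class PlayerDeathTest(unittest.TestCase):
    # Each case is (description, grid, expected result). The test body below
    # is written once and run against every grid in this table.
    CASES = [
        ("empty grid", ["...", "...", "..."], False),
        ("block high up", ["#..", "...", "..."], False),
        ("block on bottom row", ["...", "...", "..#"], True),
        ("bottom row full", ["...", "...", "###"], True),
    ]

    def test_player_death(self):
        for name, grid, expected in self.CASES:
            # subTest reports each failing case separately instead of
            # stopping at the first failure.
            with self.subTest(name):
                self.assertEqual(player_dies(grid), expected)
```

Run it with `python -m unittest your_file.py`. To add a new scenario, you add one row to `CASES`. You don't need a new test.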

Different Kinds of Tests

Software testing is a big field. It's big enough to have its own Wikipedia category, even. That's because interesting software is complicated enough that you have to be choosy about what to test. Kent C. Dodds's article was a big inspiration for my approach. Generally, I pick things to test that are either critical to the game or whose underlying code I expect to change.

The majority of my tests are what you'd call integration tests; they help me confirm that different parts of the code work together as I expect them to. In practice, that means setting up the game world and simulating it for a brief time. However, no amount of automated testing will tell me if Chromavaders is fun to play, or if there are gaping holes in the design*; I still need people (you?) for that. That's called playtesting, and my experience with it is best left for another article.

*...but if someone's researching this, please feel free to suggest a paper for me to read. Maybe I should've done this for grad school?
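The integration-test pattern above has three steps: set up a world, simulate it for a brief time, then check the end state. Here is a rough Python sketch of that pattern. The `World` class, its `fire`/`step` methods and the shooting rules are hypothetical and heavily simplified. They are not taken from Chromavaders.

```python
from dataclasses import dataclass, field


# Hypothetical, heavily simplified game world for illustration only; these
# names and rules are NOT Chromavaders' actual code. Coordinates are (x, y),
# with y = 0 at the top and the player on the bottom row.
@dataclass
class World:
    height: int
    blocks: set[tuple[int, int]] = field(default_factory=set)
    bullets: list[tuple[int, int]] = field(default_factory=list)
    player_x: int = 0

    def fire(self) -> None:
        # A new bullet starts on the player's row, in the player's column.
        self.bullets.append((self.player_x, self.height - 1))

    def step(self) -> None:
        # Advance one tick: each bullet moves up one row. A bullet that lands
        # on a block destroys it and disappears; one that leaves the top of
        # the grid is dropped.
        survivors = []
        for x, y in self.bullets:
            y -= 1
            if (x, y) in self.blocks:
                self.blocks.remove((x, y))
            elif y >= 0:
                survivors.append((x, y))
        self.bullets = survivors


def test_bullet_clears_block_in_its_column():
    # Set up: one block directly above the player.
    world = World(height=6, blocks={(2, 1)}, player_x=2)
    world.fire()
    # Simulate a short, fixed number of ticks.
    for _ in range(10):
        world.step()
    # Check the end state of the whole system, not any single function.
    assert world.blocks == set()
    assert world.bullets == []


def test_bullet_misses_block_in_other_column():
    world = World(height=6, blocks={(0, 1)}, player_x=2)
    world.fire()
    for _ in range(10):
        world.step()
    assert world.blocks == {(0, 1)}
    assert world.bullets == []
```

These tests don't check `fire` or `step` on their own. They only check what happens when the pieces run together. That is the point of an integration test, and it is also why such tests tend to survive refactoring of the internals.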

Why?

Ultimately, automated tests help me answer these questions:

- How well does the code handle weird situations?

- If I improve part of the code, will the behavior change?

- Do these very specific game mechanics work as I promise they do?

I can't assume that players will play Chromavaders exactly how I want them to. But whatever they do shouldn't crash the game or otherwise make it unplayable.
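One common way to probe "whatever they do shouldn't crash the game" is fuzzing. You feed the game a long stream of random inputs and check after every one that nothing impossible has happened. Below is a small Python sketch of that technique. The grid width, the action names and `apply_input` are all hypothetical stand-ins, not Chromavaders' code.

```python
import random

# Hypothetical minimal game state for illustration: just the player's column
# on a grid of this width. NOT Chromavaders' actual code.
WIDTH = 8
ACTIONS = ["left", "right", "fire", "none"]


def apply_input(player_x: int, action: str) -> int:
    # Move the player, clamping to the grid so that holding a direction at
    # the edge can never push them off-screen.
    if action == "left":
        return max(0, player_x - 1)
    if action == "right":
        return min(WIDTH - 1, player_x + 1)
    return player_x  # "fire" and "none" don't move the player


def fuzz(seed: int, steps: int = 10_000) -> None:
    # Feed a long, seeded random stream of inputs and check an invariant
    # after every one. A fixed seed makes any failure reproducible.
    rng = random.Random(seed)
    x = WIDTH // 2
    for _ in range(steps):
        x = apply_input(x, rng.choice(ACTIONS))
        assert 0 <= x < WIDTH, f"seed {seed}: player left the grid at x={x}"


for seed in range(20):
    fuzz(seed)
```

Seeding each run is the key design choice here. If seed 13 ever trips the assertion, rerunning `fuzz(13)` replays exactly the same input sequence, so the weird situation can be debugged rather than just observed.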


Chromavaders

'80s-style arcade puzzle shooter mashup.

| Status | In development |
|---|---|
| Author | Corundum Games |
| Genre | Puzzle, Action |
| Tags | 2D, 8-Bit, Arcade, blocks, mashup, Pixel Art, Retro, Shoot 'Em Up |
| Languages | English |
| Accessibility | Color-blind friendly |
